14 reasons why #DeleteFacebook

Over the course of August 2020, I shared why I was leaving Facebook on Facebook. My intent was to set a deadline for exchanging contact information and to raise awareness of the harm Facebook inflicts.

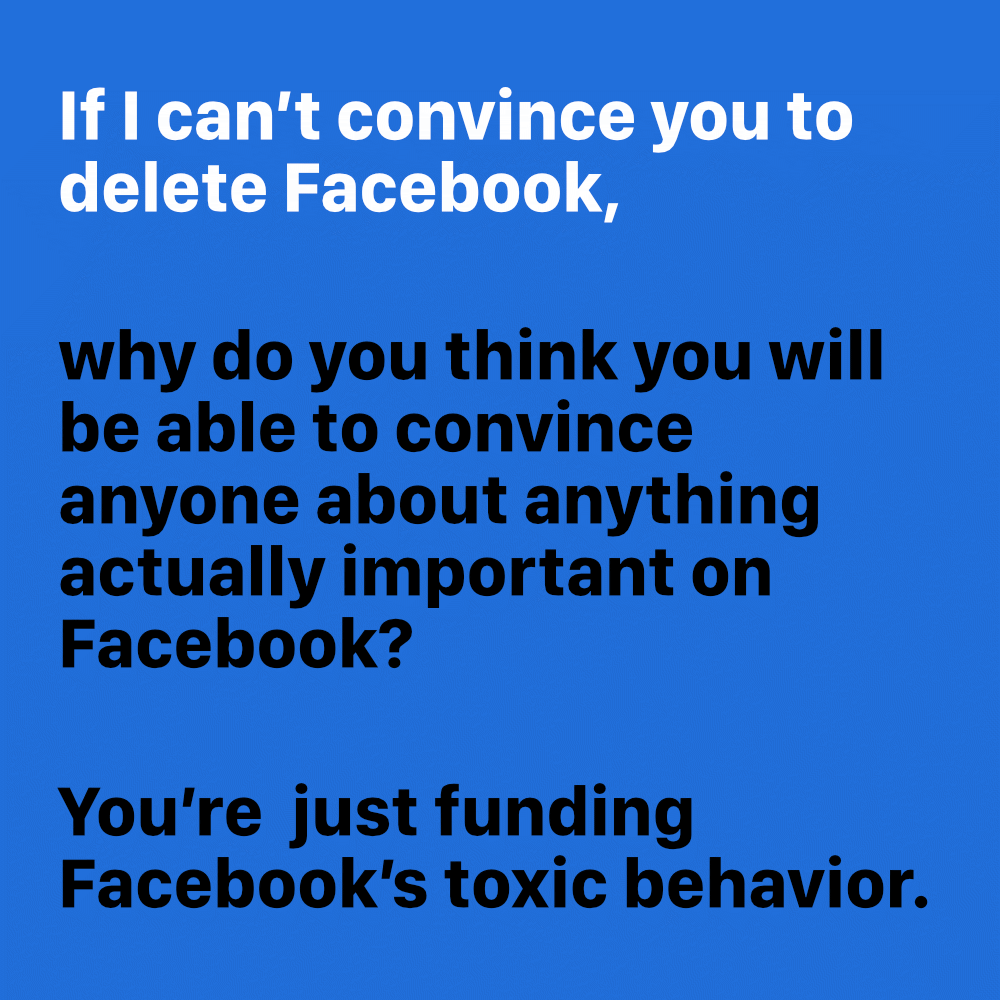

While I am unaware of anyone being convinced by my campaign, I hope more people join me in deleting Facebook. You are free to share and remix these images.

I captioned each image post with:

I’m deleting my Facebook account on September 1, but I’m not unfriending you. Let’s exchange contact info here: https://www.jeremiahlee.com/posts/delete-facebook/


![“Time and again, Facebook brazenly reveals its danger as an out-of-control, largely unchecked monopoly. The fact it is allowing demonstrably false political ads designed to suppress the vote is the latest example of the threat the company poses.” Mark Stanley, director of communications for Demand Progress. “People everywhere are angry that Facebook is profiting by allowing candidates to place demonstrably false political ads that sabotage our democracy by spreading disinformation about vote-by-mail during a pandemic.” Trent Lange, President of the California Clean Money Action Fund. “[Facebook] is being weaponized to spread hate and violence, harm vulnerable communities, and undermine our democracy…” Vanita Gupta, chief executive of the Leadership Conference on Civil and Human Rights. If Facebook won’t change, then we must change. The only way to get Facebook’s attention is to stop using it because that’s the only way Facebook loses revenue.](entry-9.png)

Photo of Mark Zuckerberg © Anthony Quintano. Used under a Creative Commons Attribution 2.0 Generic license.